Statistics vs. Computer Science: A Draw?

I’ve been thinking about what Big Data really is for quite a while now, and I am always happy about voices that can shed some more light on this phenomenon, especially by contrasting it with what we have called statistics for centuries.

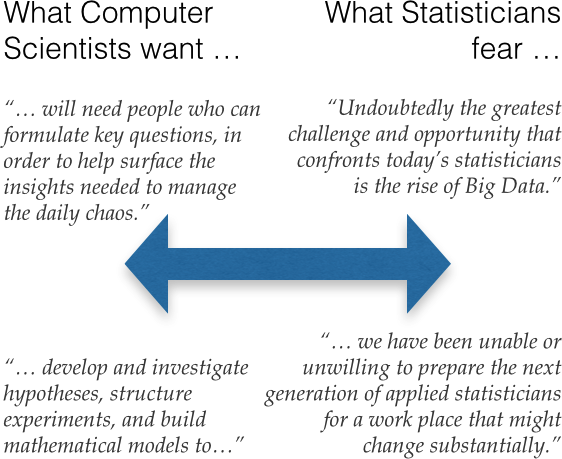

Recently I stumbled over two articles. The first is from Miko Matsumura, who claims “Data Science Is Dead” and largely laments that data science lacks (statistical) theory. The other is from Thomas Speidel, who asks “Time to Embrace a New Identity?”, largely lamenting that statistics is not embracing new technologies and applications.

In the end, it looks like both think “the grass is always greener on the other side”, which is nothing new for people who are able to reflect. But there is certainly more to it. Whereas statistics is based on concepts and relies on the interplay of exploration and modeling, technology trends are very transient: what is bleeding-edge technology today is old hat tomorrow.

So both are right, and the only really new thing for statisticians is that we need to talk not only to the domain experts but also, more thoroughly, to the data providers, or start to work on Hadoop clusters and start using Hive and HQL …

Respectfully, I do not think that is an accurate paraphrase of Thomas Speidel. Statistics is designing new techniques all the time. The quote below and to the right of the arrow is right.

Also, I do not see Big Data as a big challenge for statisticians. I do not see computer scientists as structuring many experiments, etc. I think the challenge for statisticians would be the overconfident anti-statisticians.

Sorry to be so disagreeable. I find merit in your last paragraph and wish you well.